The Open Systems Interconnection (OSI) model is a conceptual model for standardizing the communication functions of a computing or telecommunications system, regardless of its underlying architecture or technology. It aims to make different communication systems interoperable by giving them a common set of standard protocols.

The OSI model was the first standard model for network communications, introduced in 1983 by the major computer and telecom companies of the time. It was adopted by the ISO as an international standard in 1984, and has since been used to visualize how different networks operate and communicate.

In effect, the OSI model can be regarded as a type of universal language for computer networks.

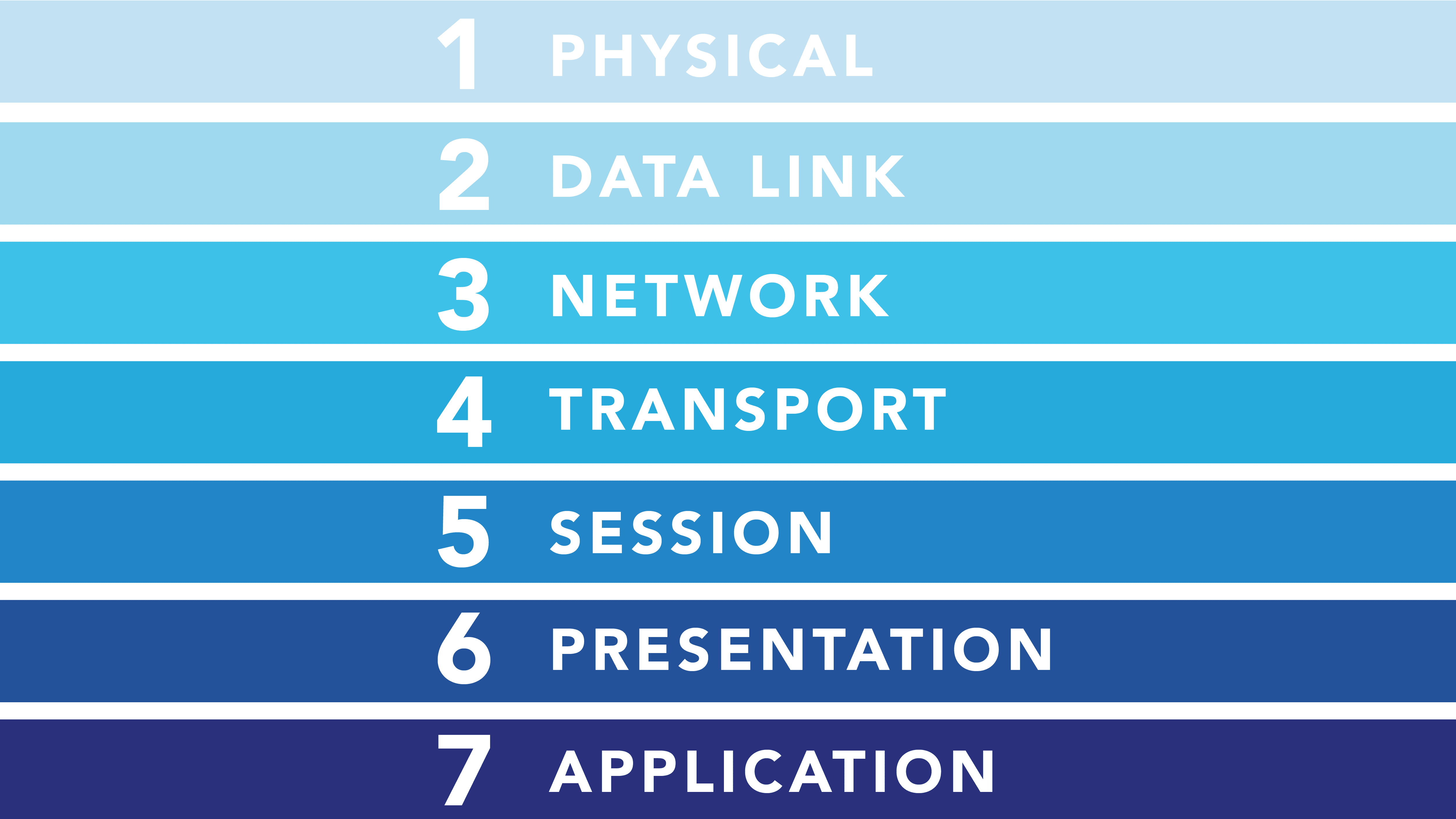

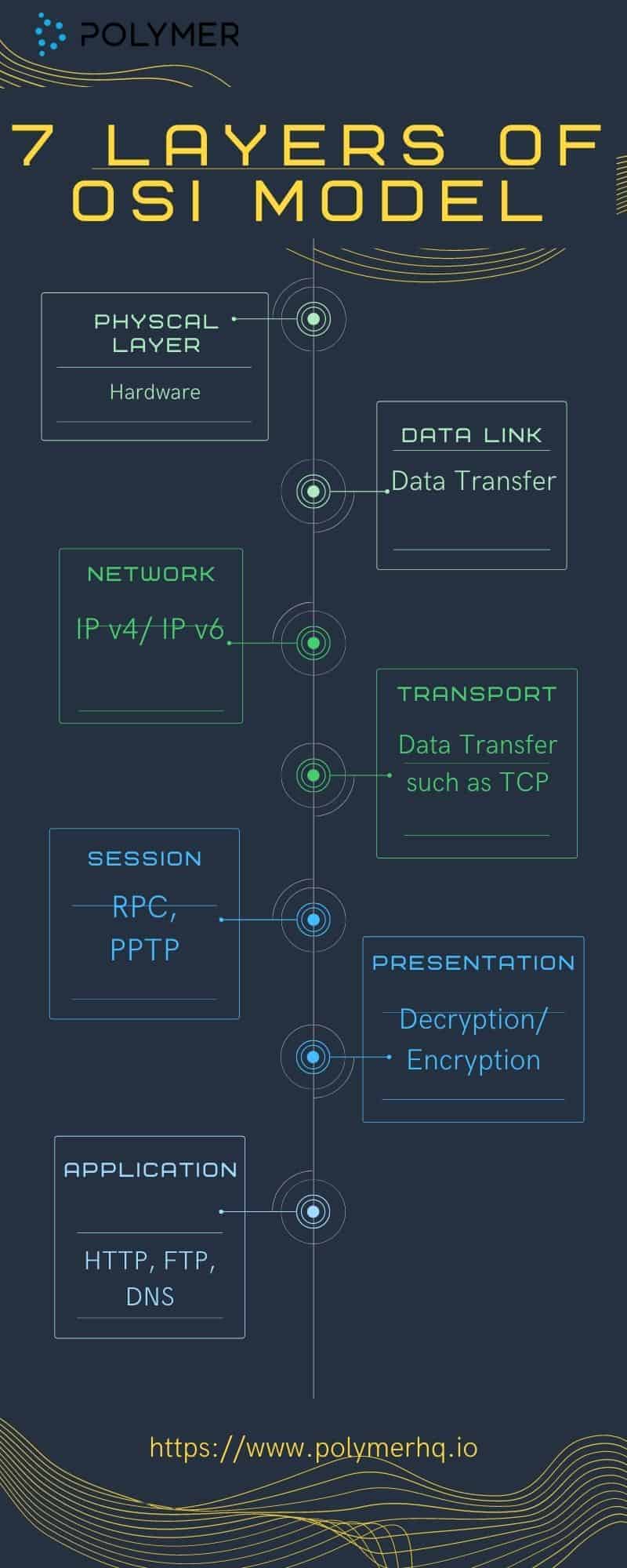

What are the 7 layers of the OSI model?

The model centers on seven interconnected layers. Data flows down through the layers of the source device, travels across the network, and then flows up through the layers of the receiving system until it reaches the seventh layer.
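
The down-then-up flow described above is usually called encapsulation and decapsulation. The toy sketch below illustrates the idea under simplifying assumptions: each layer's "header" is just a text tag such as `[transport]` (real protocols use binary headers, and the data link layer also appends a trailer), and the physical layer adds nothing because it only transmits the resulting bits. The function and constant names are hypothetical.

```python
from typing import List

# Layers 7 down to 2. Each wraps the data it receives from the layer above.
# Layer 1 (physical) adds no header; it only transmits the finished bits.
LAYERS: List[str] = [
    "application", "presentation", "session",
    "transport", "network", "data_link",
]


def encapsulate(payload: bytes) -> bytes:
    """Sender side: pass data down the stack, prepending one header per layer."""
    for layer in LAYERS:
        header = f"[{layer}]".encode()  # placeholder tag, not a real header format
        payload = header + payload
    return payload


def decapsulate(frame: bytes) -> bytes:
    """Receiver side: pass data up the stack, stripping headers in reverse order."""
    for layer in reversed(LAYERS):
        header = f"[{layer}]".encode()
        if not frame.startswith(header):
            raise ValueError(f"expected a {layer} header")
        frame = frame[len(header):]
    return frame
```

For example, `encapsulate(b"hello")` produces `b"[data_link][network][transport][session][presentation][application]hello"`: the last header added by the sender is the first one the receiver removes.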

Below, we look at each layer in more detail, starting from the bottom:

1. Physical Layer: As the name suggests, this layer covers the physical equipment involved in data transfer, such as cables and connectors. Here, data is handled as a raw sequence of 1s and 0s (a bitstream) and converted into electrical, optical or radio signals for transmission.

2. Data Link: This layer handles data transfer between two directly connected network nodes and detects errors introduced at the physical layer. It has two sublayers: Logical Link Control (LLC) and Media Access Control (MAC). The LLC sublayer manages multiplexing, flow control and error checking for the protocols above it. The MAC sublayer controls how devices gain access to the transmission medium and uses MAC addresses to identify the sending and receiving hardware in each data frame.

3. Network: The network layer moves data between different networks. It packages data into packets, assigns logical network addresses, and chooses the route each packet takes to its destination. Routers operate at this layer, and common protocols include Internet Protocol version 4 (IPv4) and Internet Protocol version 6 (IPv6).

4. Transport: This layer provides end-to-end data transfer between hosts. It can use flow control to send data at a rate the receiver can handle, and error checking to detect and recover from lost or corrupted data. The best-known example is the Transmission Control Protocol (TCP), which provides both; the User Datagram Protocol (UDP) is a lighter alternative that does not.

5. Session: To let devices share information, this layer opens a session in which computers or servers can hold a dialogue, and it manages that session from set-up through coordination to termination. Well-known session layer protocols include the Remote Procedure Call (RPC) protocol and the Point-to-Point Tunneling Protocol (PPTP).

6. Presentation: Think of this layer as the translator. If each system speaks its own data language, it's this layer's job to translate data into a format the receiving application can use. Its tasks include encryption and decryption, encoding, and compression.

7. Application: This is the ‘human’ layer of the model, which enables data communication for end users through software such as web browsers and email clients. It provides the protocols that let software present meaningful data to users. Common examples include the Hypertext Transfer Protocol (HTTP), File Transfer Protocol (FTP) and Domain Name System (DNS).
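
To make the lower layers more concrete, the sketch below reads the addressing fields that layers 2, 3 and 4 each contribute to a single frame: MAC addresses from the Ethernet II header (data link), IP addresses from the IPv4 header (network), and port numbers from the TCP header (transport). It is a minimal illustration, assuming an Ethernet II frame carrying IPv4 and TCP with no VLAN tag; it does not verify checksums. `parse_frame` and `sample_frame` are hypothetical helpers, and the sample uses documentation-range IP addresses.

```python
import socket
import struct
from typing import Dict, Union


def parse_frame(frame: bytes) -> Dict[str, Union[str, int]]:
    """Extract the addresses that layers 2, 3 and 4 add to an Ethernet/IPv4/TCP frame."""
    # Layer 2 (data link): 14-byte Ethernet II header = dst MAC, src MAC, EtherType.
    dst_mac, src_mac, ethertype = struct.unpack("!6s6sH", frame[:14])
    if ethertype != 0x0800:
        raise ValueError("payload is not IPv4")

    # Layer 3 (network): IPv4 header. IHL (low 4 bits of byte 0) is its length in 32-bit words.
    ip = frame[14:]
    header_len = (ip[0] & 0x0F) * 4
    if ip[9] != 6:  # protocol field: 6 means TCP
        raise ValueError("payload is not TCP")
    src_ip, dst_ip = ip[12:16], ip[16:20]

    # Layer 4 (transport): the TCP header starts with the source and destination ports.
    src_port, dst_port = struct.unpack("!HH", ip[header_len:header_len + 4])

    return {
        "src_mac": src_mac.hex(":"),
        "dst_mac": dst_mac.hex(":"),
        "src_ip": socket.inet_ntoa(src_ip),
        "dst_ip": socket.inet_ntoa(dst_ip),
        "src_port": src_port,
        "dst_port": dst_port,
    }


def sample_frame() -> bytes:
    """Build a hand-made test frame (checksums are left as 0)."""
    eth = struct.pack("!6s6sH", bytes.fromhex("aabbccddeeff"),
                      bytes.fromhex("112233445566"), 0x0800)
    ipv4 = struct.pack("!BBHHHBBH4s4s", 0x45, 0, 40, 0, 0, 64, 6, 0,
                       socket.inet_aton("192.0.2.1"), socket.inet_aton("198.51.100.7"))
    tcp = struct.pack("!HH", 49152, 80) + bytes(16)  # ports + rest of a 20-byte header
    return eth + ipv4 + tcp
```

Calling `parse_frame(sample_frame())` returns the source MAC `11:22:33:44:55:66`, source IP `192.0.2.1` and destination port `80`, each read from the header of a different layer.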

As you can see above, these seven layers group and standardize protocols according to network function. As a whole, the model facilitates interoperability between different systems and is vendor-agnostic.

Where do cross-layer elements come in?

Cross-layer functions are services that span more than one layer. They tend to center on three principles: security, reliability and availability. For instance, management and security are not tied to a specific layer and can involve all seven. Common examples include:

- The security service defined in the ITU-T X.800 recommendation, which safeguards data transfers.

- Management functions, including the configuration, instantiation, monitoring and termination of connections between devices.

- Multiprotocol Label Switching (MPLS), which sits between layer 2 and layer 3 of the OSI model and is often described as a "layer 2.5" protocol. It can carry multiple forms of traffic, including IP packets, SONET, and Ethernet frames.
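
MPLS's in-between position is visible in its wire format: a stack of 4-byte label entries is inserted after the layer 2 header and before the layer 3 packet. The sketch below packs and unpacks one such entry using the field layout from RFC 3032 (20-bit label, 3-bit traffic class, 1-bit bottom-of-stack flag, 8-bit TTL). It is a minimal illustration only; the class and function names are hypothetical and not taken from any particular library.

```python
import struct
from typing import NamedTuple


class LabelStackEntry(NamedTuple):
    label: int    # 20 bits: selects the label-switched path
    tc: int       # 3 bits: traffic class (originally called "EXP")
    bottom: bool  # 1 bit: set on the last entry of the label stack
    ttl: int      # 8 bits: time to live


def encode_entry(e: LabelStackEntry) -> bytes:
    """Pack one entry into its 4-byte, big-endian wire form."""
    if not (0 <= e.label < 2**20 and 0 <= e.tc < 8 and 0 <= e.ttl < 256):
        raise ValueError("field out of range")
    word = (e.label << 12) | (e.tc << 9) | (int(e.bottom) << 8) | e.ttl
    return struct.pack("!I", word)


def decode_entry(data: bytes) -> LabelStackEntry:
    """Unpack the first 4 bytes of data into a label stack entry."""
    (word,) = struct.unpack("!I", data[:4])
    return LabelStackEntry(
        label=word >> 12,
        tc=(word >> 9) & 0x7,
        bottom=bool((word >> 8) & 0x1),
        ttl=word & 0xFF,
    )
```

For instance, an entry with label 16, traffic class 0, the bottom-of-stack flag set and a TTL of 64 encodes to the bytes `00 01 01 40`.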

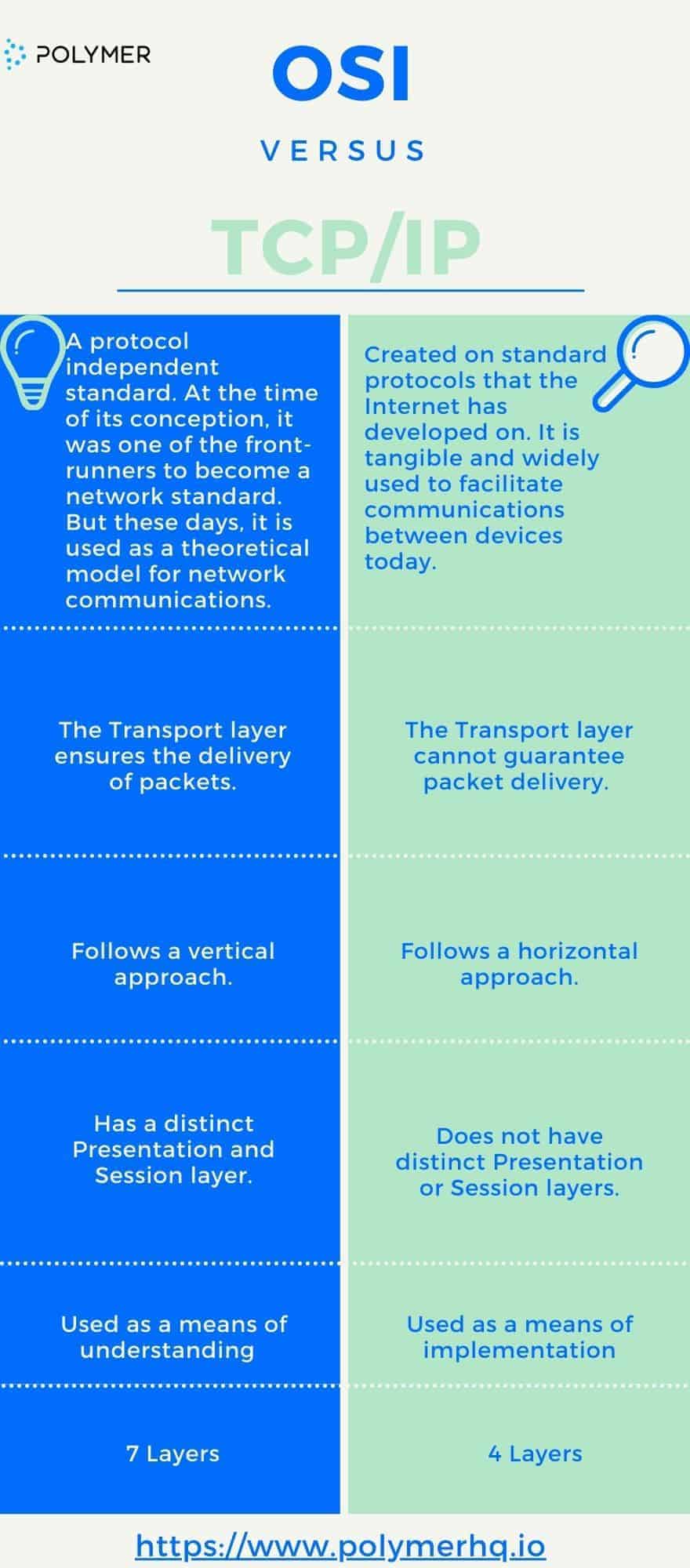

OSI vs TCP/IP Model

Why it’s important to know the OSI layers

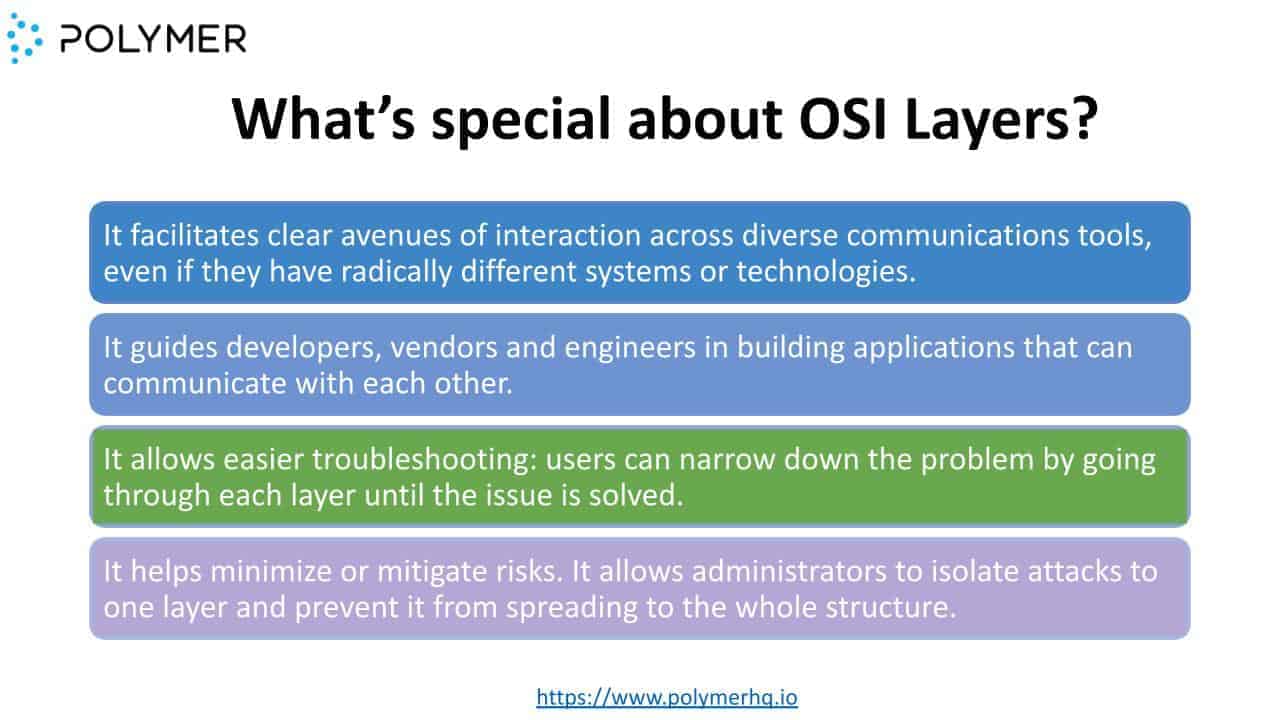

Despite being nearly four decades old, the OSI model is still worth understanding for the following reasons:

- It provides a common reference for how diverse communication tools interact, even when they use radically different systems or technologies.

- It guides developers, vendors and engineers in building applications that can communicate with each other.

- It makes troubleshooting easier: users can narrow down a problem by checking each layer in turn until they find the one at fault.

- It helps minimize or mitigate risks by allowing administrators to isolate an attack to one layer and prevent it from spreading to the whole structure.
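
The layer-by-layer troubleshooting idea can be sketched as a bottom-up loop: run one health check per layer, starting at layer 1, and stop at the first failure, since every layer above it depends on it. This is a hypothetical outline; the checks are placeholder callables that you would replace with real tests (for example, link status for layer 2 or a ping to the gateway for layer 3).

```python
from typing import Callable, List, Optional, Tuple

# (layer number, layer name, check); a check returns True if that layer looks healthy.
LayerCheck = Tuple[int, str, Callable[[], bool]]


def first_failing_layer(checks: List[LayerCheck]) -> Optional[Tuple[int, str]]:
    """Run checks from layer 1 upward and return the lowest layer that fails."""
    for number, name, check in sorted(checks, key=lambda c: c[0]):
        if not check():
            # Higher layers depend on this one, so there is no point testing them yet.
            return number, name
    return None  # every check passed
```

With placeholder checks where only the network check returns `False`, `first_failing_layer` reports `(3, "network")` and never runs the transport check.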

Is the OSI model still relevant today?

In 2019, a group of IT and networking specialists called the 451 Alliance famously declared the OSI model obsolete. The reasons they gave are:

- The 4-layer Internet model is more flexible and adaptable to today’s technologies.

- Newer models emphasize integrating new components, whereas OSI focuses on a full stack of platforms. This makes them more efficient, convenient and cost-effective.

- TCP/IP has a more flexible architecture, unlike the rigid structure of OSI where layers are highly dependent upon each other. With OSI, a failure in a lower layer can greatly impact the functions of higher layers.
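
A common way to relate the seven OSI layers to the four-layer TCP/IP (Internet) model is sketched below: the top three OSI layers collapse into the TCP/IP application layer, and the bottom two into the link layer. The layer names follow a widespread convention (some texts call the link layer "network access", and others use a five-layer hybrid); the dictionary and function names are hypothetical.

```python
from typing import Dict, List

# OSI layer (top to bottom) -> the TCP/IP layer that covers it.
OSI_TO_TCPIP: Dict[str, str] = {
    "application": "application",
    "presentation": "application",
    "session": "application",
    "transport": "transport",
    "network": "internet",
    "data link": "link",
    "physical": "link",
}


def tcpip_view() -> Dict[str, List[str]]:
    """Group the seven OSI layers under the four TCP/IP layers, keeping top-down order."""
    grouped: Dict[str, List[str]] = {}
    for osi_layer, tcpip_layer in OSI_TO_TCPIP.items():
        grouped.setdefault(tcpip_layer, []).append(osi_layer)
    return grouped
```

The result has four keys, which is the sense in which TCP/IP is "more flexible": the presentation and session concerns are left to the application rather than given layers of their own.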

The 451 Alliance is not alone in its criticisms. The OSI model appears to divide the IT and infosec communities, and it has been called overly complex, impractical and out of date for modern networks.

However, despite the difficulty of applying the OSI model in practice, it remains relevant today, particularly for cybersecurity professionals. Over the years, it has served as a common point of reference for millions of professionals discussing networks and data security.

Exploring new alternatives to the OSI model

With the rise of cloud computing, the OSI model is being challenged further. In services like AWS and Azure, the boundaries of control and access are blurred, further complicating the data security puzzle.

To address this, some IT professionals are proposing alternative models that better suit modern connectivity requirements. One example is a five-layer model, in which layer 1 (Physical Infrastructure) and layer 2 (Hypervisor) are in the hands of the cloud provider. Layer 3 (the Software Defined Data Centre) is where organizations manage data security, access controls and redundancy. Layer 4 (the Native Service) is where workloads are created and data is stored. Finally, layer 5 is similar to the Application layer (layer 7) of the OSI model.

Cisco has proposed a similar concept, but with six layers. In this version, a new Services layer is inserted between layers 4 and 5 of the model above. Services covers middleware elements such as databases, web servers and email services.

While neither concept has been formalized, the goal, like that of the traditional OSI model, is to improve IT personnel's understanding of the different levels of the stack so that they can better manage and secure data.

Where does DLP fit in?

If you don’t know where your data is, it’s impossible to adequately secure it. The OSI model gives IT and security teams a framework for understanding how and where data is used throughout the network communication process, which helps them visualize the data journey, manage data security risks and conduct asset inventories. By knowing where your data resides, whether on premises or in the cloud, you can create better information security policies to manage risk and deploy the right security tools to improve data visibility. This is where DLP comes in.

Today, it’s likely that most, if not all, of your sensitive data travels in and out of SaaS applications. You will therefore need a dynamic DLP solution, like Polymer, that can monitor, secure and redact sensitive data across your collaboration apps. This visibility, and tailored management of data across all layers of the stack, is pivotal both to preventing data breaches and to ensuring compliance with regulations such as HIPAA, CCPA and GDPR, which govern how personal data (PII) and protected health information (PHI) are handled.
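
Redaction is one of the DLP capabilities mentioned above. The sketch below shows a generic, pattern-based version of the technique; it is not how Polymer or any specific product works. The two patterns (an email address and a US Social Security number written as NNN-NN-NNNN) are illustrative assumptions, and `PATTERNS` and `redact` are hypothetical names. Real DLP tools combine many detectors with context checks to reduce false positives.

```python
import re
from typing import Dict

# Illustrative patterns only; production DLP uses far more robust detection.
PATTERNS: Dict[str, re.Pattern] = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each match with a labelled placeholder such as [REDACTED:EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[REDACTED:{label}]", text)
    return text
```

For example, `redact("Contact jane.doe@example.com, SSN 123-45-6789.")` returns `"Contact [REDACTED:EMAIL], SSN [REDACTED:US_SSN]."`, leaving the surrounding text intact so the message remains usable.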

So, while the OSI model may not map neatly onto real-world networks today, it is certainly a helpful, logical framework for defining and implementing data loss prevention requirements.